- Client

- VideotronicMaker.com

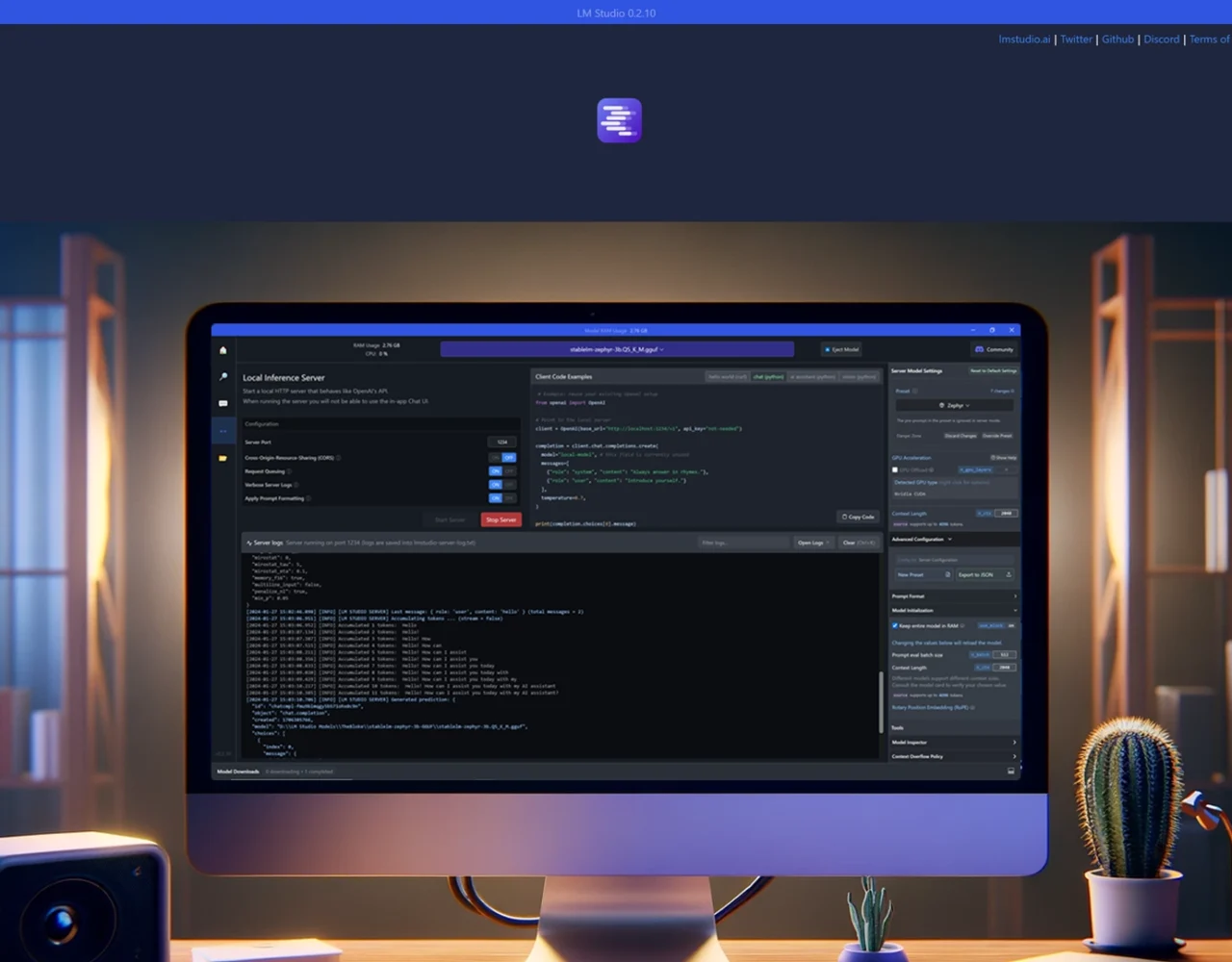

This tutorial shows how to use LM Studio without the chat UI by running its local server. You can deploy an open-source LLM in LM Studio on your PC or Mac and run it without an internet connection. The files in the video include a function that initiates a conversation with the local model, establishes the message roles, and defines where the instructions come from. The script dynamically reads the system message from a text file, so you can update the system message, system prompt, or pre-prompt (known in ChatGPT as custom instructions) without changing the script's code.
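The setup described above can be sketched roughly as follows. This is not the script from the video, only a minimal illustration under stated assumptions: that the LM Studio local server is running on its usual default address (`http://localhost:1234/v1`) with an OpenAI-compatible chat completions endpoint, and that the system message lives in a file called `system_message.txt`. The file name, the function names, and the `temperature` value are hypothetical choices made for this example.

```python
import json
import urllib.request
from pathlib import Path

# Assumptions: LM Studio's local server is started and listening on its
# usual default port, and it accepts OpenAI-style chat completion requests.
BASE_URL = "http://localhost:1234/v1"
SYSTEM_MESSAGE_FILE = "system_message.txt"  # hypothetical file name


def load_system_message(path=SYSTEM_MESSAGE_FILE):
    """Read the system message from a text file.

    Calling this before each request means edits to the file take effect
    without touching the script's code.
    """
    return Path(path).read_text(encoding="utf-8").strip()


def build_messages(system_message, history, user_input):
    """Assemble the role-tagged message list for one request."""
    return (
        [{"role": "system", "content": system_message}]
        + list(history)
        + [{"role": "user", "content": user_input}]
    )


def chat(messages, model="local-model", base_url=BASE_URL):
    """Send the messages to the local server and return the reply text."""
    payload = json.dumps(
        {"model": model, "messages": messages, "temperature": 0.7}
    ).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def main():
    """Simple terminal chat loop; type 'exit' or 'quit' to stop."""
    history = []
    while True:
        user_input = input("You: ")
        if user_input.strip().lower() in {"exit", "quit"}:
            break
        # Re-read the system message each turn so file edits apply live.
        messages = build_messages(load_system_message(), history, user_input)
        reply = chat(messages)
        print(f"Assistant: {reply}")
        history += [
            {"role": "user", "content": user_input},
            {"role": "assistant", "content": reply},
        ]
```

To try it, start the local server in LM Studio with a model loaded, create `system_message.txt` next to the script, and call `main()`. Keeping the system message in a separate file is what lets you change the assistant's instructions by editing plain text rather than code.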
